Best Open-Source Vector Databases

Last updated: November 7, 2024

Table of contents

- What are vector databases?

- Which one should you use?

- Performance benchmark

- Milvus

- Qdrant

- Weaviate

- Try your relational database

What the heck are we gonna do with all these AI embeddings?

- Every data engineer in the world the past 3 years

What are vector databases?

Vector databases are data systems that can efficiently store and retrieve high-dimensional arrays, or “vectors”. The recent AI boom has driven a surge of interest in these systems because transformers, the most hyped type of AI model, represent meaning with embeddings, which is just another word for a vector created by an AI model.

So basically, “embeddings” are multi-dimensional arrays, which can also be called “vectors”. They’re all the same thing for our purposes, so we’re just going to call them vectors from here on out.

Possibly the most important feature of a vector database is its ability to efficiently find vectors that are “close to each other”. The easiest way to understand this is with an example.

Imagine a two-dimensional space such as a map. For two points on a map to be considered close to each other, they would have to have similar X and Y values. How about a three-dimensional space? They would need to have similar X, Y, and Z values. Scale this up to hundreds, or even thousands, of embedding dimensions, and you have the type of problem that vector databases are designed to solve.
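To make “close to each other” concrete, here is a minimal sketch of two common ways to measure it: Euclidean distance (the straight-line distance from the map example) and cosine similarity (how closely two vectors point in the same direction). The function names are mine, and this is plain Python for illustration, not how any of the databases below implement it.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Two points on a 2D "map", then the same idea in 3D.
    print(euclidean_distance([0, 0], [3, 4]))
    print(cosine_similarity([1, 2, 3], [2, 4, 6]))
```

The same two functions work unchanged for hundreds or thousands of dimensions. The hard part, and the reason vector databases exist, is doing this quickly across millions of stored vectors.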

Which one should you use?

Below are the products that I believe are worth your time, based on the following criteria:

- Developer experience (docs, client libraries)

- Performance

- Would I bet on that database being actively developed in 5 years?

- Does the system easily scale to handle large amounts of data?

Here is a summary of my opinions on those criteria:

| Category | Milvus | Weaviate | Qdrant |
|---|---|---|---|
| Developer experience | Good | OK | Good |
| Performance | Great | Good | OK |
| Project stability | Good | OK | OK |
| Data scalability | OK | OK | Good |

Performance benchmark

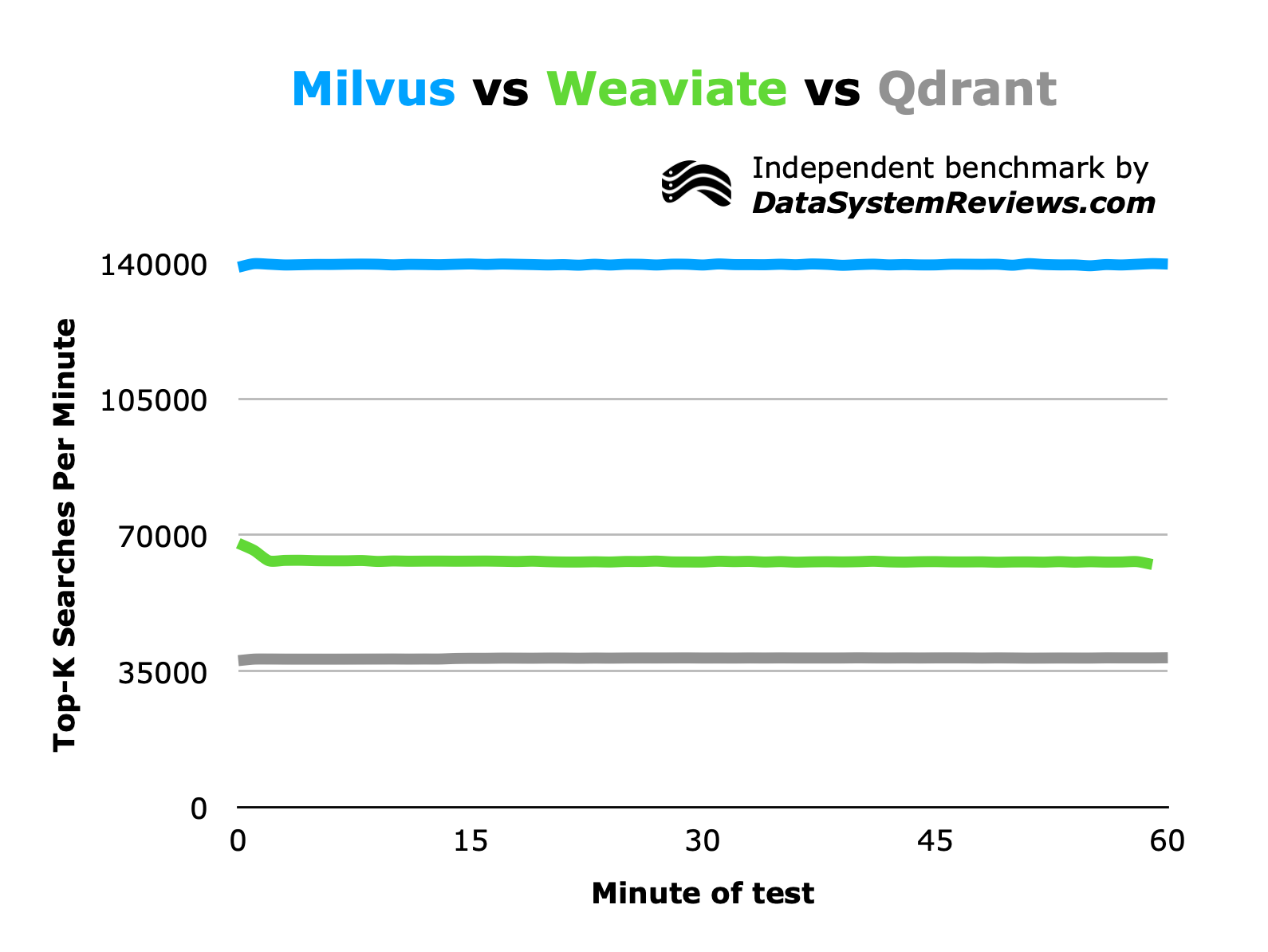

Here is a performance comparison based on my Searchly vector database benchmark. First, a minute-by minute chart:

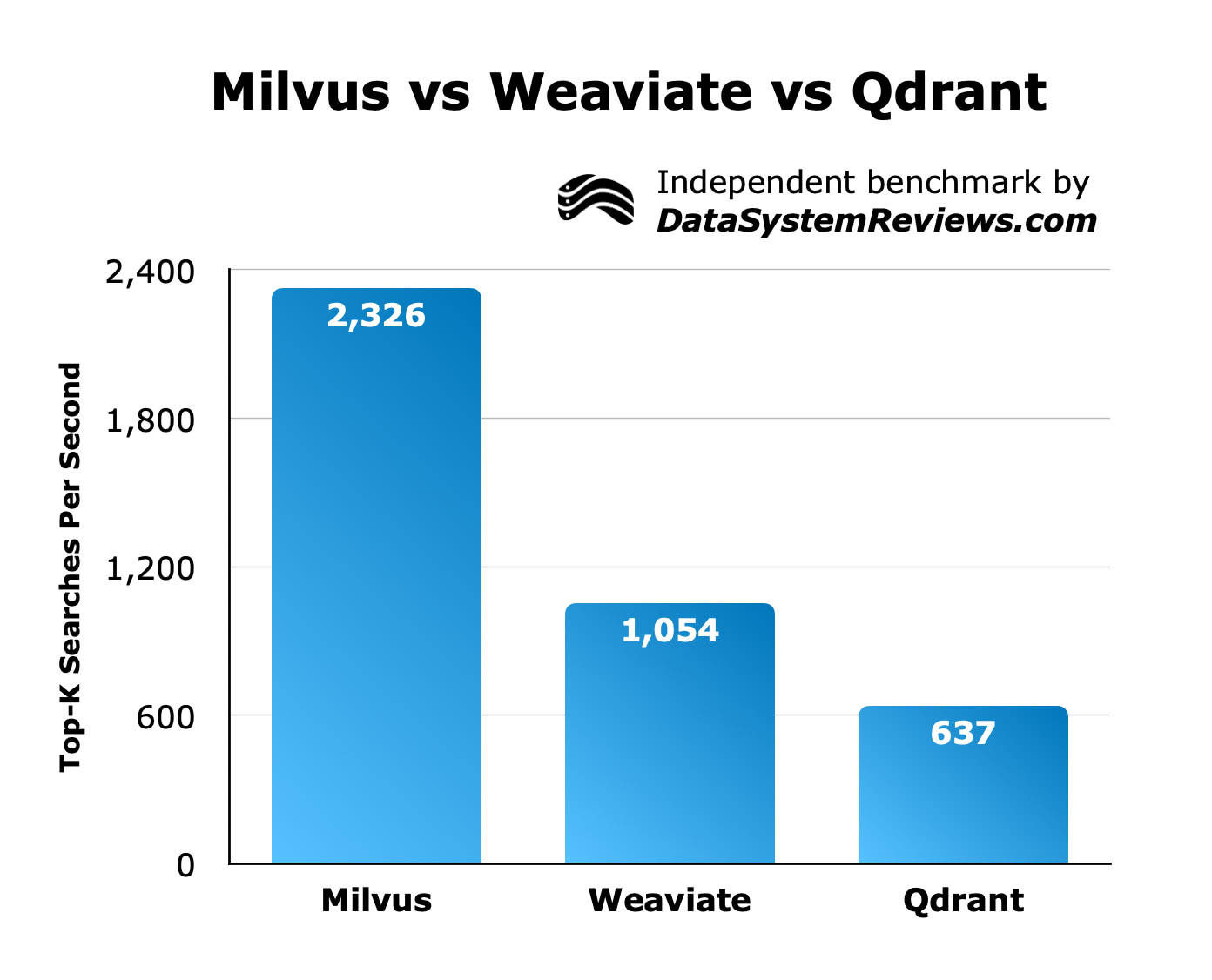

Next, a table that comes from the same data, but instead shows the amount of Top-K searches each database was able to serve, per second:
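If “Top-K searches per second” is unfamiliar, here is a minimal sketch of what one such search does and how a throughput number can be computed from it. This is a brute-force scan in plain Python with function names I made up. It is not the Searchly benchmark code, and real vector databases typically use approximate indexes such as HNSW instead of scanning every vector.

```python
import heapq
import time


def top_k(query, vectors, k):
    """Return the indices of the k stored vectors nearest to the query, nearest first."""
    def squared_distance(v):
        # Squared Euclidean distance preserves ordering, so the sqrt can be skipped.
        return sum((q - x) ** 2 for q, x in zip(query, v))

    return heapq.nsmallest(k, range(len(vectors)), key=lambda i: squared_distance(vectors[i]))


def searches_per_second(queries, vectors, k):
    """Run every query once and report how many top-K searches completed per second."""
    start = time.perf_counter()
    for query in queries:
        top_k(query, vectors, k)
    elapsed = time.perf_counter() - start
    return len(queries) / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    stored = [[5, 5], [1, 0], [0, 0.5], [9, 9]]
    print(top_k([0, 0], stored, 2))
    print(searches_per_second([[0, 0], [1, 1]] * 100, stored, 2))
```

A brute-force scan like this gets slower in direct proportion to the number of stored vectors, which is why index choice matters so much at scale.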

Milvus

Milvus is my favorite vector database overall. So long as your data fits in RAM, it has no downsides. However, if your data needs grow large and you don’t have significant resources to deploy it, it could become unwieldy.

Developer experience

Milvus has good documentation, a decent set of client libraries, and their website has a professional polish to it that inspires confidence in the project.

I build all of my benchmark applications in Go and I found Milvus’ Go client to be the best of the bunch. It was the client I found easiest to work with while building the Searchly benchmark program. The hello_milvus.go example was particularly helpful.

Deployment was relatively easy with the provided Docker Compose file, though I was surprised to learn that it has two dependencies: etcd and MinIO. I’m guessing Milvus uses etcd to store cluster configuration data and MinIO for data storage, but I haven’t gotten a chance to dive into the architecture yet. I assume MinIO could be swapped for any S3-compatible API, as well.

Overall, the developer experience was good. Milvus has gotten a lot of things right, in my opinion.

Performance

Milvus was the fastest at serving my open-source Searchly vector database benchmark by a wide margin. With a million fake web pages vectorized and indexed, it was able to serve around 2,300 Top K searches per second on speedy-1.

Milvus is fast.

Project stability

Milvus is a graduated project of the LF AI & Data Foundation, which is part of the Linux Foundation. That gives it a degree of separation from Zilliz, the company attempting to monetize it. This is a great signal of project stability, in my opinion.

We all know that VC-backed tech startups have a track record of going belly-up, so whenever a project is stewarded by a non-profit organization, it gives me additional confidence that it isn’t just a money grab that will die whenever funding runs out.

I think Milvus is here to stay, even if Zilliz goes under.

Scalability

Unfortunately, Milvus has an Achilles’ heel. It has to load the entirety of a collection into RAM to perform vector similarity searches. This means that as your data scales, you’re going to need machines with significant amounts of RAM.

To be honest, this is baffling to me. If they just figured out how to spill searches to disk when required, Milvus would be my favorite vector database by a mile. Even if offering this feature requires tradeoffs that are suboptimal from a technical and performance standpoint, it’s important for that option to exist.

Milvus can write indexes to disk (and I assume search them) with the DiskANN algorithm, and in fact that is the default behavior… But not the collections themselves.
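To get a feel for what “fits in RAM” means, here is a back-of-the-envelope sketch. It only counts the raw vector values, assuming 4-byte floats, and ignores index structures, metadata, and any overhead the database adds. The 768-dimension figure is my assumption for illustration, not a number from the benchmark.

```python
def raw_vector_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Bytes needed to hold the raw vector values alone (4 bytes per float32 value)."""
    return num_vectors * dimensions * bytes_per_value


def to_gib(num_bytes):
    """Convert a byte count to gibibytes."""
    return num_bytes / 2**30


if __name__ == "__main__":
    # One million vectors with an assumed 768 dimensions each.
    total = raw_vector_bytes(1_000_000, 768)
    print(f"{total} bytes, about {to_gib(total):.2f} GiB")
```

One million vectors at that size is roughly 3 GB before any overhead, which is manageable. A hundred million is roughly 300 GB, and that is where the all-in-RAM requirement starts to hurt.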

Qdrant

Qdrant is my second favorite vector database. It has a good developer experience and solid scalability characteristics, but lags behind Milvus in performance.

Developer experience

Qdrant’s docs were my favorite of the bunch. Qdrant is the only vector database I reviewed that:

- Lists simple commands to get up and running with its Docker image on its Docker Hub page.

- Plainly shows how to make API calls to query some data.

Here’s how you run it:

```shell
docker run -p 6333:6333 qdrant/qdrant
```

And here’s how you query it:

```shell
curl -X POST \
  'http://localhost:6333/collections/collection_name/points/search' \
  --header 'api-key: <api-key-value>' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "vector": [
      0.2,
      0.1,
      0.9,
      0.7
    ],
    "limit": 1,
    "filter": {
      "must": [
        {
          "key": "city",
          "match": {
            "value": "London"
          }
        }
      ]
    }
  }'
```

Boom. That’s all I wanted to see, and it was easy to find. Everyone else, take note please.
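If you would rather make that call from code than from curl, here is a minimal sketch of the same request using only Python's standard library. The endpoint, headers, and JSON body mirror the curl example above. The function names and the choice of `urllib` are mine, and `search` only works if a Qdrant instance with that collection is actually running.

```python
import json
import urllib.request


def build_search_request(base_url, collection, vector, limit, city, api_key=None):
    """Build the same POST request as the curl example, without sending it."""
    payload = {
        "vector": vector,
        "limit": limit,
        "filter": {"must": [{"key": "city", "match": {"value": city}}]},
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only sent when a key is supplied, matching the curl example's api-key header.
        headers["api-key"] = api_key
    url = f"{base_url}/collections/{collection}/points/search"
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def search(request):
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    req = build_search_request(
        "http://localhost:6333", "collection_name", [0.2, 0.1, 0.9, 0.7], 1, "London"
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Splitting the request building from the sending keeps the payload easy to inspect and test without a database running.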

Also, their Go client’s GitHub page had good examples that made it easy to get started.

Performance

Despite being my favorite vector database to work with, and the one with the best scalability characteristics, Qdrant was the slowest at serving my open-source Searchly vector database benchmark. With a million fake web pages vectorized and indexed, it was able to serve around 640 Top K searches per second on speedy-1.

Now, this might actually be plenty fast for you. So, if you don’t anticipate huge amounts of search demand, or if you can invest more into configuring / deploying Qdrant than I have here, the positive developer experience and scalability features might be worth the performance tradeoff. However, there is no denying that it is significantly slower than the other two systems, in my tests.

Project stability

Qdrant is not stewarded by a non-profit foundation. It is open-source, which is great, but I always question whether anyone will continue developing a project in the future if the VC-funded organization behind it goes under.

It’s up to you if you want to make Qdrant an irreplaceable component of your company’s tech stack. It is open-source, so you will always be able to run whatever workload you need on self-managed infrastructure.

Scalability

Qdrant, unlike Milvus, can utilize the disk for searches on collections that are larger than your system’s memory. This can lead to performance tradeoffs, but it’s really important to me that the option is there in the first place.

Also, they allow you to store HNSW indexes on disk if they grow too large! Out of the vector databases I reviewed, they are the only one that seems to be able to search collections that are larger than your system’s RAM, and spill HNSW indexes to disk.

Qdrant is, in my opinion, the vector database that is the easiest to scale, in terms of data volume stored.
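For reference, here is a minimal sketch of how a collection with on-disk vectors and an on-disk HNSW index might be requested. It assumes Qdrant's REST collection-creation endpoint accepts `on_disk` under both `vectors` and `hnsw_config`, which is my understanding of the API, so check the docs for your version. The function name, vector size, and distance metric are placeholders I chose.

```python
import json
import urllib.request


def build_create_collection_request(base_url, collection, size, distance="Cosine"):
    """Build a PUT request asking Qdrant to keep vectors and the HNSW index on disk."""
    payload = {
        # Assumed field names; verify against the Qdrant docs for your version.
        "vectors": {"size": size, "distance": distance, "on_disk": True},
        "hnsw_config": {"on_disk": True},
    }
    return urllib.request.Request(
        f"{base_url}/collections/{collection}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


if __name__ == "__main__":
    req = build_create_collection_request("http://localhost:6333", "collection_name", 768)
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

The request is only built here, not sent. Pass it to `urllib.request.urlopen` against a running instance to actually create the collection.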

Weaviate

Weaviate is another great vector database.

Developer experience

Like Milvus, Weaviate has good documentation, a decent set of client libraries, and their website looks great.

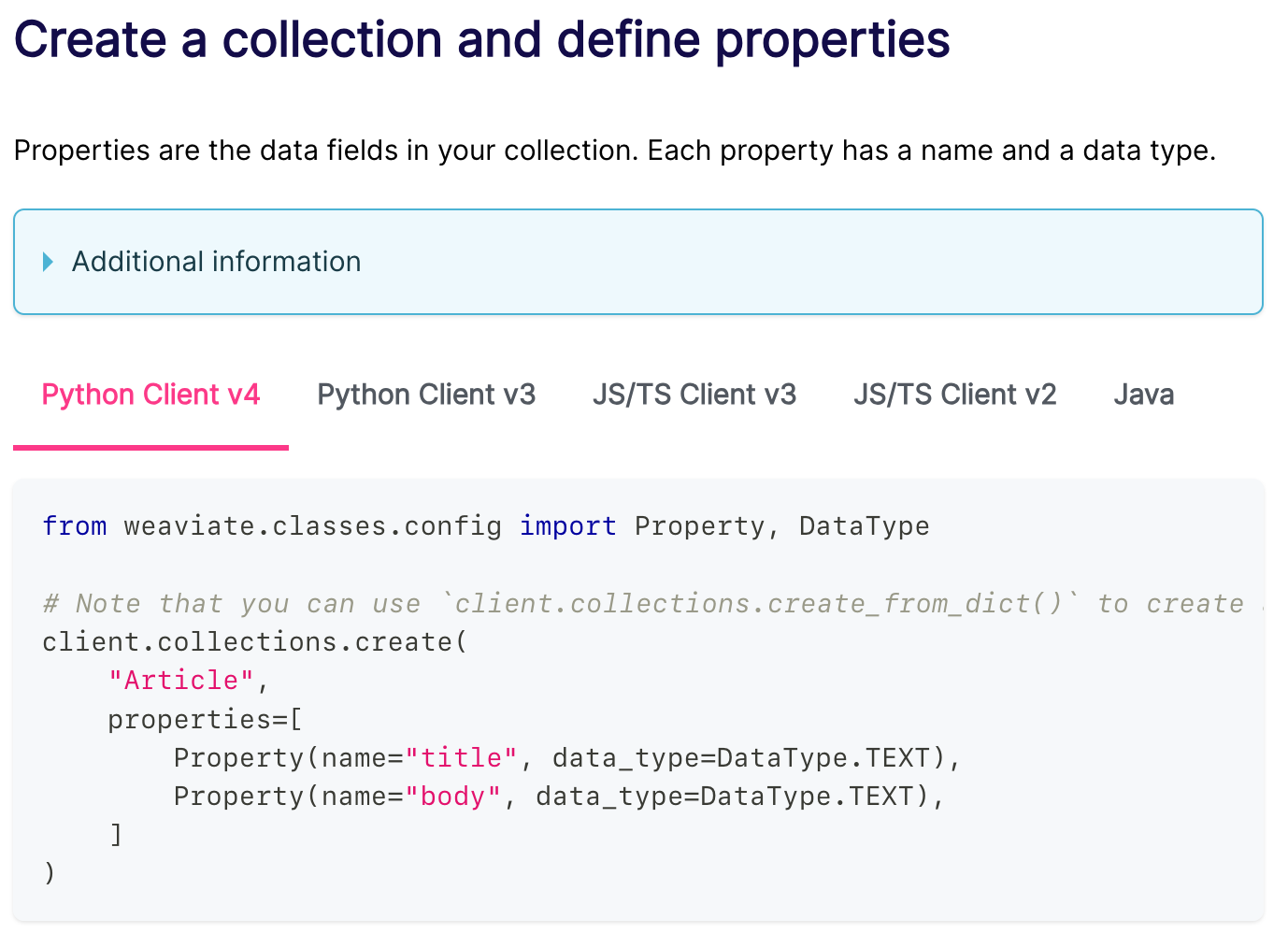

Unfortunately for me, Weaviate’s Go client was relatively less-well documented than the Python and Javascript / Typescript clients. For example, the section of the docs that specify how to create a collection and specify its properties (the equivalent of creating a table and specifying columns) did not show how to perform that operation in Go. Here is a screenshot:

As you can see, Python, Javascript, Typescript, and Java are all accounted for, but no Go 😢.

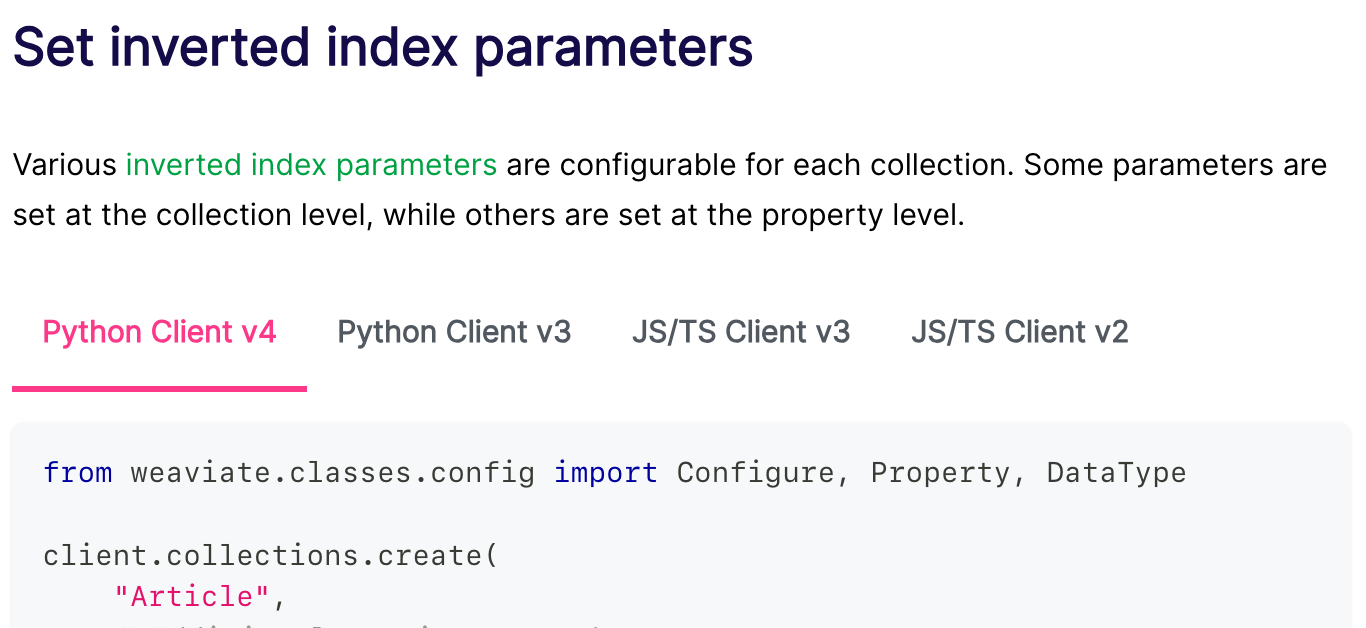

Sometimes even the Java client is under-documented, as in the section on setting inverted index parameters:

The docs are great if you’re using the Python or JavaScript / TypeScript clients. However, I can only report on my own experience, and that was one of frustration when trying to communicate with Weaviate from a Go client.

At least deployment was easy with the provided Docker image. There were no dependencies.

Overall, the developer experience was just OK.

Performance

Weaviate was the second fastest at serving my open-source Searchly vector database benchmark. With a million fake web pages vectorized and indexed, it was able to serve around 1,050 Top K searches per second on speedy-1.

Importantly, it is the fastest system I tested that can also spill searches on larger-than-memory collections to disk. This is a very important distinction, even though it is not the fastest overall.

Project stability

Weaviate is not stewarded by a non-profit foundation. It is open-source, which is great, but I always question whether anyone will continue developing a project in the future if the VC-funded organization behind it goes under.

It’s up to you if you want to make Weaviate an irreplaceable component of your company’s tech stack. It is open-source, so you will always be able to run whatever workload you need on self-managed infrastructure.

Scalability

Weaviate, unlike Milvus, can utilize the disk for searches on collections that are larger than your system’s memory. Apparently, this leads to quite serious performance tradeoffs, but it’s really important to me that the option is there in the first place.

However, I was not able to find any way to write vector indexes to disk with Weaviate, such as DiskANN. They have written a blog post on the topic, but I can’t find any evidence that they’ve actually implemented a solution in a released product, yet.

Try your relational database

The easiest way to get started storing and retrieving vectors is to just use your existing database.

For example, here are pages that list the vector capabilities of the following relational databases:

Vector functionality has been a priority for database vendors due to the AI boom. So, while traditional databases may lag behind vector-first databases in performance or advanced features, there’s a chance they will be good enough for your use case.
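To show how little is needed to get started, here is a minimal sketch that stores vectors in an ordinary SQL table and finds the nearest ones with a brute-force scan. It uses SQLite from Python's standard library purely because it needs no setup. The table layout and function names are my own, and this does not use or represent any vendor's vector extension, which would typically provide a dedicated column type and index instead.

```python
import json
import math
import sqlite3


def create_store(conn):
    """Create a plain table that keeps each embedding as a JSON-encoded array."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS documents ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, embedding TEXT NOT NULL)"
    )


def add_document(conn, title, embedding):
    """Insert one document with its vector."""
    conn.execute(
        "INSERT INTO documents (title, embedding) VALUES (?, ?)",
        (title, json.dumps(embedding)),
    )


def nearest(conn, query, k):
    """Return the titles of the k documents closest to the query, nearest first."""
    rows = conn.execute("SELECT title, embedding FROM documents").fetchall()
    scored = [(math.dist(query, json.loads(embedding)), title) for title, embedding in rows]
    scored.sort()
    return [title for _, title in scored[:k]]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_store(conn)
    add_document(conn, "a", [0.0, 0.0])
    add_document(conn, "b", [1.0, 1.0])
    add_document(conn, "c", [5.0, 5.0])
    print(nearest(conn, [0.9, 0.9], 2))
```

This reads every row on every search, so it only makes sense for small tables. It is a starting point for experimenting, not a substitute for a real vector index.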

With that being said, vector-first databases are still your best bet for building scalable AI applications in the present and will probably remain so for the foreseeable future.