PostgreSQL VS MySQL Performance Comparison

Last updated: November 9, 2024

In this article, I conduct original research into the performance of MySQL and PostgreSQL using Reserva, a custom benchmarking tool I created to simulate a high-volume digital payments system.

PostgreSQL is 474% faster than MySQL in my most recent tests. Both systems are mature, modern, and performant databases, but I would recommend using PostgreSQL unless you have a specific reason for using MySQL.

Reserva

Reserva is a tool I made to simulate a streamlined, high-volume payments processing system. It is written in Go and supports an arbitrary level of concurrency.

On start, Reserva loads all users and authentication tokens into memory, then attempts to make as many funds transfers as possible in a loop. Each funds transfer requires 4 round trips to the database and touches 6 tables. The workflow is as follows:

- DB is queried to authenticate and authorize the user who is requesting payment.

- DB is queried to get information about the card used and account to be debited.

- DB is queried to authenticate and authorize the user who will approve or deny the payment request.

- DB is queried to update both account balances and record the payment.
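To make the last step of that workflow concrete, here is a minimal sketch of an atomic funds transfer. It is not Reserva's code (Reserva is written in Go and targets PostgreSQL and MySQL). It uses an in-memory SQLite database as a stand-in, and the table names, column names, and the `make_demo_db` and `transfer_funds` helpers are all hypothetical.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Create a tiny in-memory schema (hypothetical, not Reserva's real one)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL);
        CREATE TABLE transfers (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            from_account INTEGER NOT NULL,
            to_account INTEGER NOT NULL,
            amount INTEGER NOT NULL
        );
        INSERT INTO accounts (id, balance) VALUES (1, 1000), (2, 500);
        """
    )
    return conn


def transfer_funds(conn: sqlite3.Connection, from_id: int, to_id: int, amount: int) -> bool:
    """Debit one account, credit the other, and record the payment atomically."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            debit = conn.execute(
                "UPDATE accounts SET balance = balance - ? "
                "WHERE id = ? AND balance >= ?",
                (amount, from_id, amount),
            )
            if debit.rowcount != 1:
                raise ValueError("insufficient funds or unknown debit account")
            credit = conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, to_id),
            )
            if credit.rowcount != 1:
                raise ValueError("unknown credit account")
            conn.execute(
                "INSERT INTO transfers (from_account, to_account, amount) "
                "VALUES (?, ?, ?)",
                (from_id, to_id, amount),
            )
        return True
    except ValueError:
        return False
```

Wrapping all three statements in one transaction means a failed debit leaves both balances and the transfers table untouched, which is the property a payments workload depends on.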

Reserva allows you to select whether or not you want deletes to be part of the workload. The results on this page do include deletes as part of the workload.

Benchmark setup

Let’s talk about the hardware, database versions, and configurations that were used to conduct this test.

Hardware

I plan on running a lot of database benchmarks in the near future, and constantly paying for cloud services was starting to get expensive. So, I decided to purchase a few mini PCs and a networking switch to simulate a modern cloud environment.

Also, I like buying computer stuff 😃.

CPUs

The mini PCs are 11th gen Intel NUCs. Specifically, they are:

- NUC11PAHi70Z00, which has an i7-1165G7 processor.

- NUC11PAHi50Z00, which has an i5-1135G7 processor.

I put the database servers on the computer with the i7 processor, because Reserva is less CPU intensive than the database programs it’s designed to test.

Both chips have 4 hyperthreaded cores for a total of 8 threads. This makes them comparable to an 8 vCPU VM that you might rent in the cloud. They also have a TDP of 28 watts, which I measured during load. In fact, these computers are well cooled and sometimes even surpass 28 watts!

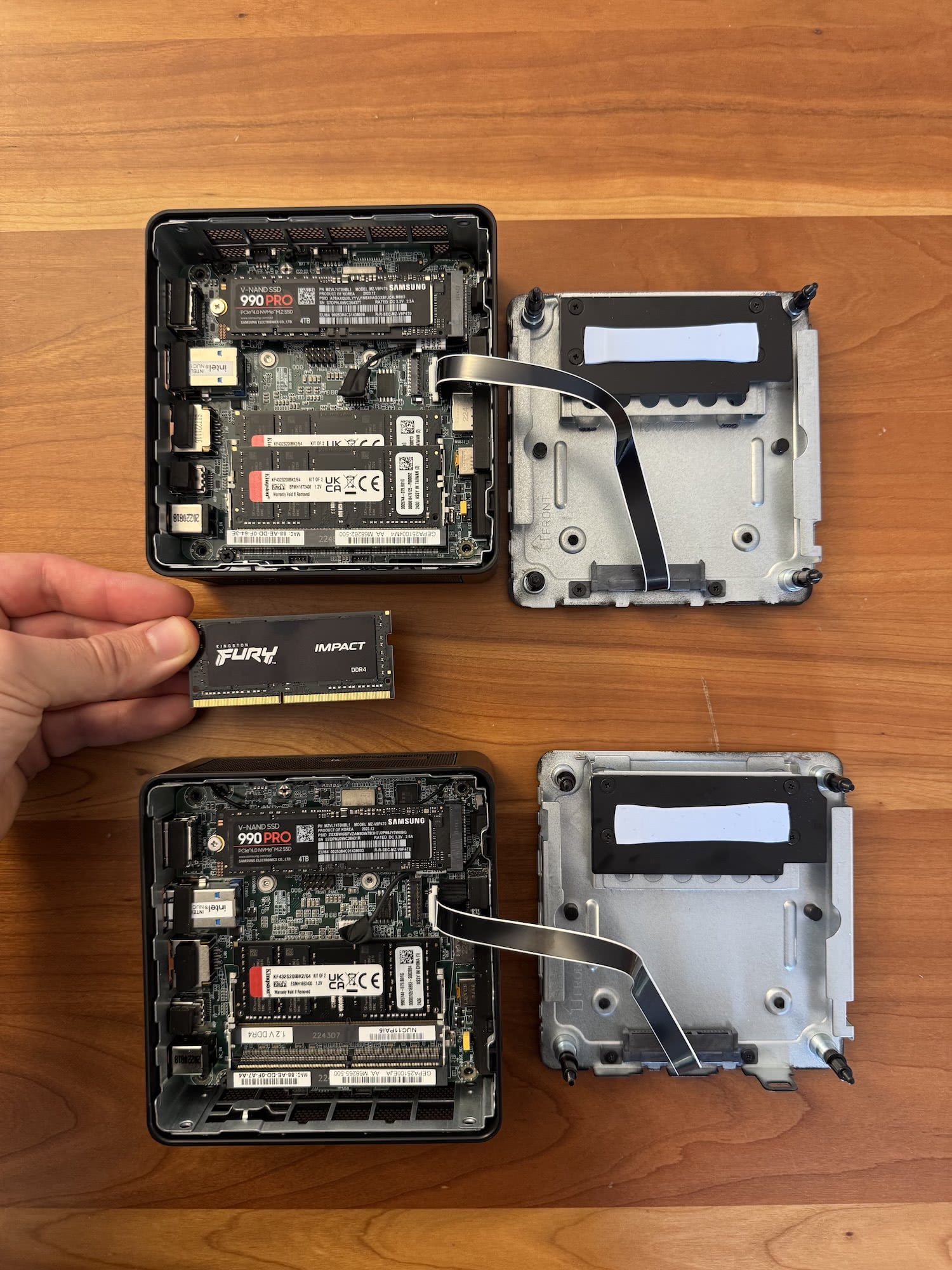

RAM / Disks

I purchased high quality RAM and SSDs to get the most out of these mini PCs. They are both outfitted with:

- 64 GB, dual-channel 3200 MHz Kingston Fury RAM

- 4 TB Samsung 990 Pro PCIe 4 SSD

It is well known that databases can become bottlenecked by slow disks, so I paid particular attention to my choice of SSD. The Samsung 990 Pro is, as far as I’m aware, one of the fastest PCIe 4 disks available on the market.

Networking

I initially conducted these tests using my M1 MacBook Air connected to one of the mini PCs via WiFi. However, I noticed right away how much that relatively poor connection slowed down the benchmark results. Over WiFi 6 with a strong connection, PostgreSQL was only able to complete 300-500 transfers per second. That is roughly 30 times slower than what I eventually achieved!

So, I decided to go all in and buy another mini PC, plus:

- Netgear MS305 2.5 gigabit networking switch

- Rhino Cables cat6a ethernet cables.

This brought my network latency, as measured with ping, from several milliseconds down to around 0.65 milliseconds. A change of a few milliseconds is imperceptible to a person, but it made a huge difference in my final test results. So, pay attention to networking, folks!
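A rough back-of-the-envelope calculation shows why latency matters so much here. Each transfer needs 4 sequential round trips, so network latency alone caps the throughput of every worker. The sketch below is an upper bound that ignores query execution time entirely. The 64 workers match the concurrency used in the test, the 3 ms figure is an assumed stand-in for "several milliseconds", and the function name is hypothetical.

```python
def max_transfers_per_second(rtt_seconds: float, round_trips: int, concurrency: int) -> float:
    """Upper bound on throughput when only network round trips cost time."""
    seconds_per_transfer = rtt_seconds * round_trips
    return concurrency / seconds_per_transfer


# 0.65 ms ping over wired ethernet, 4 round trips, 64 concurrent workers.
wired = max_transfers_per_second(0.00065, 4, 64)

# Assumed 3 ms ping as a stand-in for "several milliseconds" over WiFi.
wifi = max_transfers_per_second(0.003, 4, 64)

print(f"wired ceiling: {wired:,.0f} transfers/s")
print(f"wifi ceiling:  {wifi:,.0f} transfers/s")
```

Real throughput will sit below these ceilings because the database also has to execute each query, but the ratio between the two numbers tracks the ratio between the two latencies.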

Comparable cloud instances

Here are a couple of comparable database servers with 8 vCPUs and 64 GB of RAM from AWS and Azure:

Before storage, it would cost about $846.72 per month to rent a similar machine on AWS.

Despite their size, these are powerful computers with resources that resemble a busy, production quality system.

Databases

Now, let’s discuss the databases used and how they were configured.

PostgreSQL config

I deployed the postgres:17.0-bookworm Docker image on the i7 mini PC.

I spent a whole day configuring performance parameters and found that, for this particular use case, the only one that made any real difference was shared_buffers.

The PostgreSQL docs recommend a value of around 25% of total system RAM for shared_buffers, so I used the following minimal postgresql.conf file:

    listen_addresses = '*'
    shared_buffers = 16GB

MySQL config

I used the mysql:9.1.0-oraclelinux9 Docker image to deploy MySQL. After quite a few tests, I found that the only change that actually improved performance was setting innodb_buffer_pool_size to the recommended size of 75% of system RAM. As such, I used the following configuration file:

    [mysqld]
    innodb_buffer_pool_size = 48G
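For anyone adapting these configs to a machine with a different amount of memory, the two values above come straight from the 25% and 75% rules of thumb applied to 64 GB of RAM. A small sketch of that arithmetic, with a hypothetical helper name:

```python
def buffer_size_gb(total_ram_gb: int, fraction: float) -> int:
    """Return the buffer size in whole gigabytes for a given share of RAM."""
    return int(total_ram_gb * fraction)


TOTAL_RAM_GB = 64  # RAM installed in each mini PC

# 25% of RAM for PostgreSQL, 75% of RAM for MySQL's InnoDB buffer pool.
print(f"shared_buffers = {buffer_size_gb(TOTAL_RAM_GB, 0.25)}GB")
print(f"innodb_buffer_pool_size = {buffer_size_gb(TOTAL_RAM_GB, 0.75)}G")
```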

Populating data

For each database, I ran a preparation SQL script to create:

- 50 banks with access to the payments system and corresponding users + permissions + auth tokens

- 1,000,000 accounts, each with a corresponding payment card

You can see the SQL setup scripts, along with the rest of the code, on Reserva’s GitHub page.

The test

I ran Reserva with the following settings for both databases:

- 64 concurrent requests at a time

- Maximum of 64 open database connections (idle or active)

- 2 hour duration

- Deletes enabled as part of the workflow
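These settings describe a fixed pool of workers that loop until a deadline, with a cap on how many database connections may be open at once. Here is a minimal sketch of that shape. It is not Reserva's implementation: `run_benchmark` and its parameters are hypothetical names, and a semaphore stands in for a real connection pool.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_benchmark(do_transfer, concurrency: int, max_connections: int,
                  duration_seconds: float) -> int:
    """Run do_transfer in a loop on `concurrency` workers until time runs out.

    Returns the total number of completed transfers.
    """
    # The semaphore models a pool that allows at most max_connections in use.
    connection_slots = threading.BoundedSemaphore(max_connections)
    deadline = time.monotonic() + duration_seconds

    def worker() -> int:
        completed = 0
        while time.monotonic() < deadline:
            with connection_slots:  # hold one "connection" for the transfer
                do_transfer()
            completed += 1
        return completed

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(worker) for _ in range(concurrency)]
        return sum(future.result() for future in futures)
```

A call mirroring the settings above would look like `run_benchmark(do_transfer, concurrency=64, max_connections=64, duration_seconds=2 * 60 * 60)`, where `do_transfer` performs one complete funds transfer.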

Aggregation query

At the end of the test, I executed the following queries to show how many payments were made during each minute. The results of those queries are visualized below.

PostgreSQL query

    SELECT
        DATE_TRUNC('minute', CREATED_AT) AS MINUTE,
        COUNT(*) AS NUMBER_OF_TRANSFERS
    FROM
        TRANSFERS
    GROUP BY
        MINUTE
    ORDER BY
        MINUTE;

MySQL query

    SELECT
        hour(CREATED_AT) AS hour_created_at,
        minute(CREATED_AT) AS minute_created_at,
        COUNT(*) AS NUMBER_OF_TRANSFERS
    FROM
        transfers
    GROUP BY
        hour_created_at, minute_created_at
    ORDER BY
        hour_created_at, minute_created_at;
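Both queries bucket transfers by the minute in which they were created. One difference worth knowing: the MySQL version groups by hour and minute only, so it works for a 2 hour run but would sort the buckets out of order if a run crossed midnight. The sketch below shows the same bucketing in Python, keeping the full date in each bucket. The function name and the sample timestamps are made up for illustration.

```python
from collections import Counter
from datetime import datetime


def transfers_per_minute(created_at: list[datetime]) -> list[tuple[datetime, int]]:
    """Count transfers per calendar minute, sorted chronologically."""
    # Truncating seconds and microseconds mirrors DATE_TRUNC('minute', ...).
    buckets = Counter(ts.replace(second=0, microsecond=0) for ts in created_at)
    return sorted(buckets.items())


# Made-up timestamps that straddle midnight.
sample = [
    datetime(2024, 11, 8, 23, 59, 10),
    datetime(2024, 11, 8, 23, 59, 50),
    datetime(2024, 11, 9, 0, 0, 5),
]
for minute, count in transfers_per_minute(sample):
    print(minute.isoformat(), count)
```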

Results

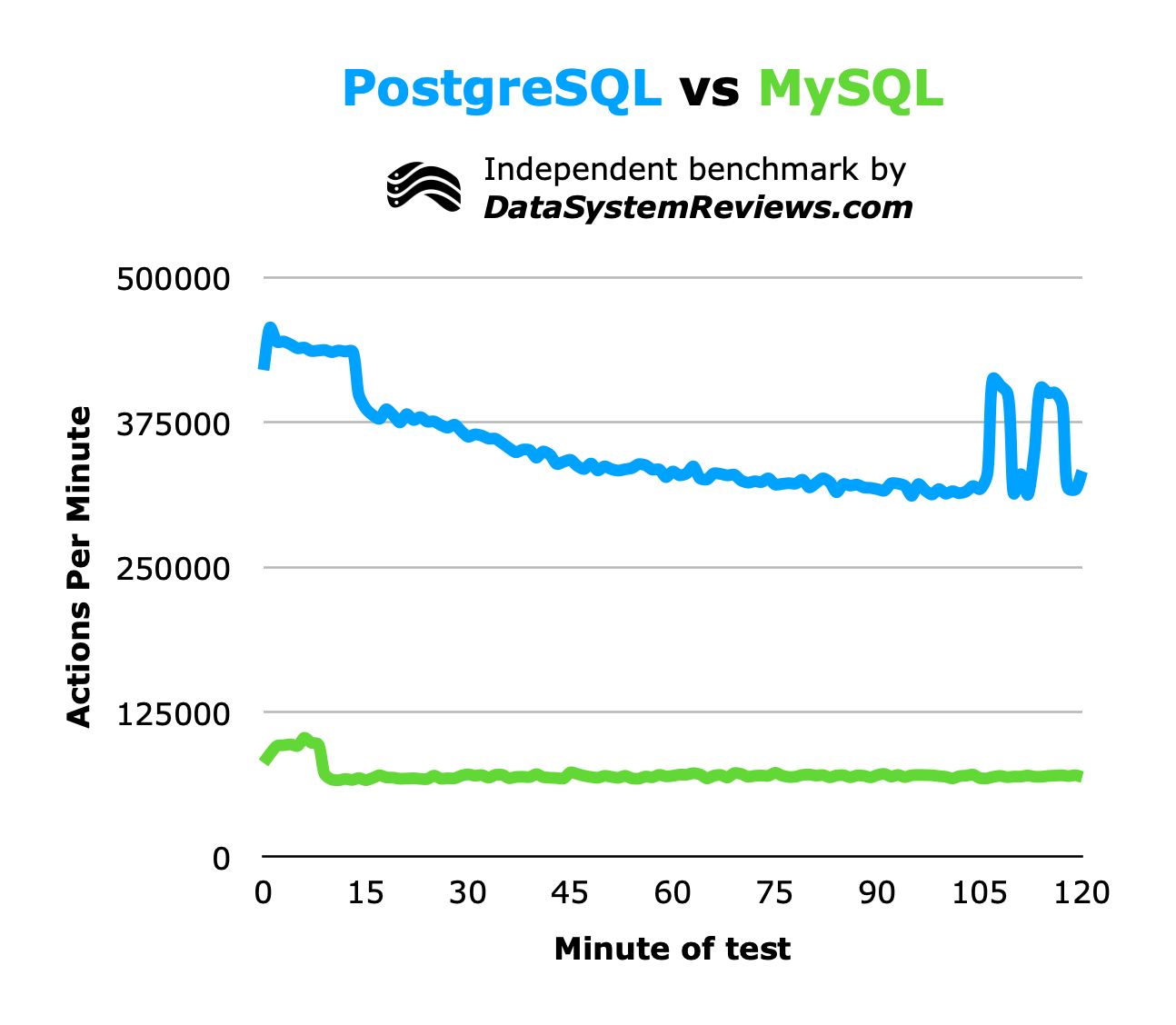

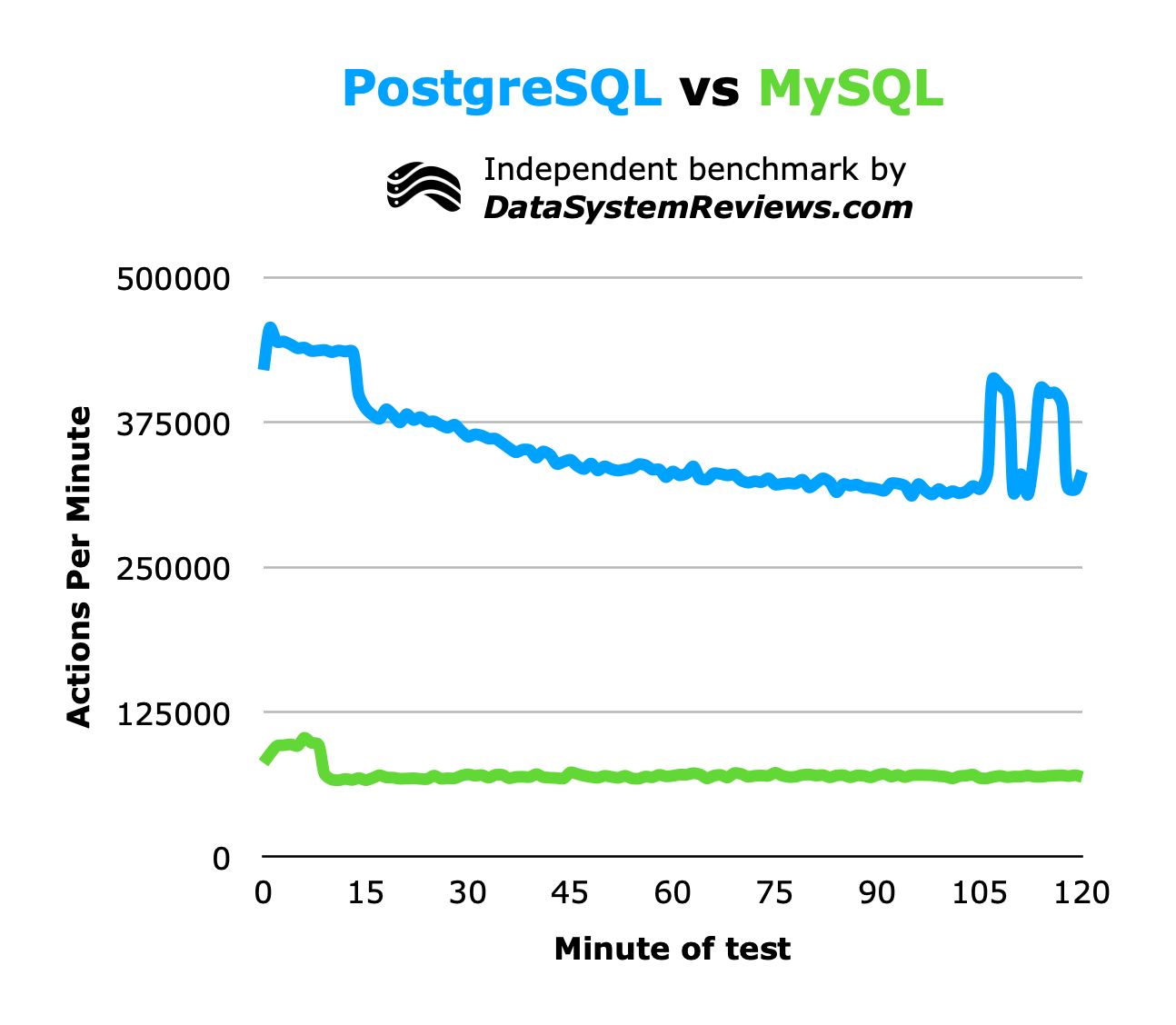

As you can see, PostgreSQL far outperformed MySQL. In fact, I was surprised how much faster it was than MySQL.

Of course, the tests of both databases used an identical version of Reserva, but I am constantly making changes to the program to improve its ability to test databases. Maybe some change I made resulted in PostgreSQL’s dominance? I guess that will play out in the near future as I perform more tests with it.

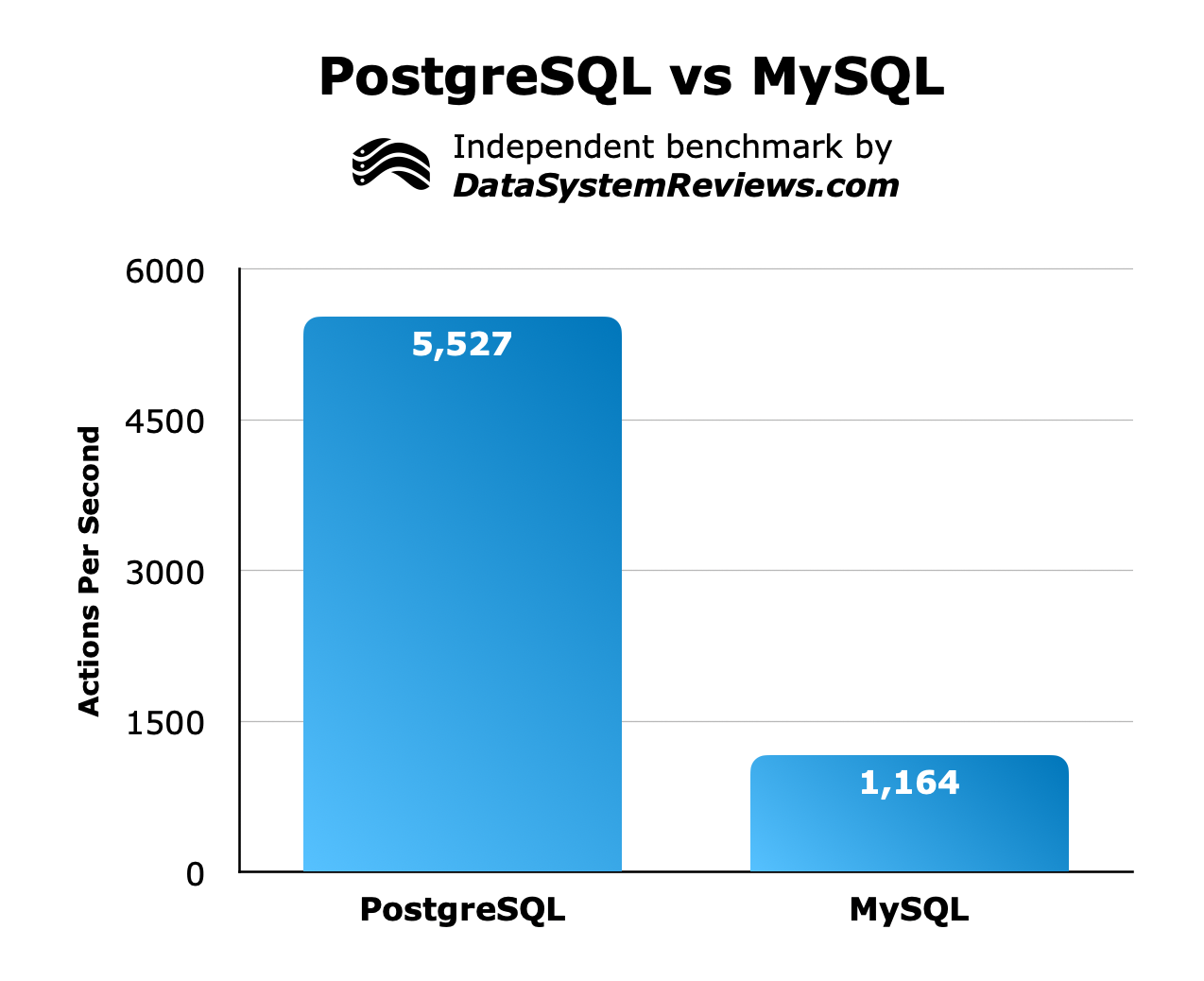

Here is another way of viewing the test results: I summed the number of payments made over the last 60 minutes of the test and divided by 3,600, the number of seconds in that window, to express the results per second.
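The conversion from per-minute counts to a per-second rate, and from two rates to a "percent faster" figure, can be sketched as follows. This assumes the goal is transfers per second, in which case a 60 minute total has to be divided by 3,600 seconds. The function names and the sample numbers are hypothetical and are not the benchmark's actual results.

```python
def transfers_per_second(per_minute_counts: list[int], window_minutes: int = 60) -> float:
    """Average per-second rate over the last `window_minutes` one-minute buckets."""
    window = per_minute_counts[-window_minutes:]
    if not window:
        raise ValueError("no per-minute counts supplied")
    return sum(window) / (len(window) * 60)  # 60 seconds in each bucket


def percent_faster(fast: float, slow: float) -> float:
    """How much faster `fast` is than `slow`, as a percentage of `slow`."""
    return (fast - slow) * 100 / slow


# Made-up data: 120 one-minute buckets with 600 transfers each.
rate = transfers_per_second([600] * 120)
print(f"{rate:.1f} transfers/s")

# Made-up rates: 574 vs 100 transfers/s is "474% faster", i.e. 5.74x the rate.
print(f"{percent_faster(574, 100):.0f}% faster")
```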

Conclusion

PostgreSQL left MySQL in the dust. It is surprising how much faster it was than MySQL, considering that some major tech organizations like Uber have written blog posts expressing their preference for MySQL for performance reasons.

I would use PostgreSQL for new projects if that option were available to me. For any existing workload that uses MySQL, you might see a large performance bump by switching to MariaDB.